Everyday AI

INNOVATORS vs. ALARMISTS

The War Over AI's Future

AI Leaders Call for a Pause as Primitive AGI Emerges and DeepMind and Google Brain Join Forces.

The Big Stuff

March 2023 may go down as one of the most innovative months in human history. Innovation is accelerating rapidly and shows no sign of slowing down, which has left many people, including many in the AI community, wondering, "What happens next?" Changes this big will no doubt have huge ramifications for society, so how should we deal with them, and with AI advancing so quickly?

There are two main camps forming on this spectrum: those who want to slow things down and evaluate the societal consequences (broadly associated with "EA," or effective altruism, and at the far end of the spectrum, "Doomers"), and those who believe we should accelerate innovation because dynamic systems are already in place to mitigate risk (broadly referred to as "e/acc," or effective accelerationism). Disclaimer: the authors of this newsletter are firmly in the e/acc camp, so keep this in mind.

This week, the Doomers proposed that we stop and discuss some of the biggest issues in an open letter, "Pause Giant AI Experiments: An Open Letter." The petition has 2,827 signatures, some verified and some not (for example, Yann LeCun, AI leader at Meta, appeared on the list but has said he did not sign). The letter argues that contemporary AI systems are becoming human-competitive at general tasks, raising concerns about their potential impact on jobs, information channels, and human control. The authors argue that powerful AI systems should be developed only once we are confident that their effects will be positive and their risks manageable, with independent review and limits on the growth of compute used to train new models. They call for a SIX-MONTH PAUSE on training AI systems more powerful than GPT-4 so that these questions can be debated and evaluated.

Jobs: Who's at risk and how fast?

Many of you have probably seen articles this week claiming that AI will eliminate 85 million jobs. That number comes from the World Economic Forum's Future of Jobs Report 2020. What some articles leave out is that the same report projects 97 million new jobs being created by 2025, a net gain of about 12 million. Meanwhile, Nobel laureate Paul Krugman, in "A.I. May Change Everything, but Probably Not Too Quickly" (paywall), argues that large language models (LLMs) such as ChatGPT will take jobs, but that it will take longer than we think. Sam Altman thinks AI will take certain jobs, such as customer service, faster than others (interview link). And OpenAI published a research paper with the University of Pennsylvania suggesting that 49% of workers could have half or more of their tasks exposed to LLMs.

As we consider the implications of AI for the future of work, a crucial question arises: will the proliferation of AI lead to fewer jobs, or to greater productivity? The answer remains uncertain. It is worth noting, however, that similar fears emerged at the onset of each of the last three industrial revolutions. We weathered each of those waves, and unemployment has remained low over the long run. One possible explanation is how hard it is to predict the new jobs that will emerge: many of today's jobs did not exist in 1940, and many did not exist even ten years ago. Ultimately, capitalism aims to produce more goods at lower cost, and AI has the potential to deliver just that.

The Great RenAIssance?

By amplifying human intelligence, AI may cause a new Renaissance, perhaps a new phase of the Enlightenment.

But prophecies of AI doom are also causing a new form of medieval obscurantism.

— Yann LeCun (@ylecun)

4:27 PM • Apr 1, 2023

Safety: Mitigating Risk as Innovation Accelerates

There has also been a lot of safety debate this week, with the likes of Eliezer Yudkowsky openly stating in a Time Magazine article that "Pausing AI Developments Isn't Enough. We Need to Shut it All Down." He goes as far as to suggest that we form international treaties and use the military to destroy any datacenters harboring GPUs should a country violate them. "Yud," as he's known, has been beating the safety drum hard, declaring "We're all going to die!"

While Yud's doomer story is great for clicks, it's not grounded in reality. He claims that an AGI (AI as smart as a human) could write the genetic code for a new virus and email it to a lab to be built, thereby wiping out humanity. But even if that were possible, and even if the lab built the new genetic material, how would the virus then be distributed? There are a ton of missing steps here, and Yud is forgetting how many safety systems already exist.

For example, AI-based self-driving already goes through a certification process, and AI-assisted medical imaging goes through safety review as well. Medical labs have their own safety systems, and companies such as OpenAI have embedded safety teams.

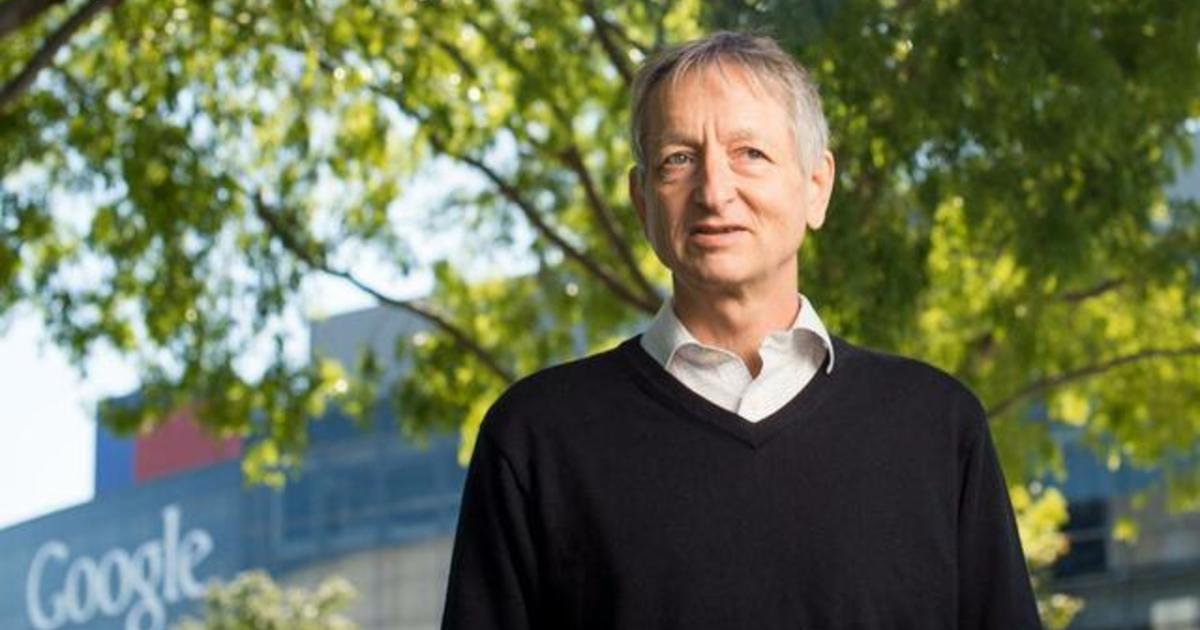

We argue that discussing safety for systems that do not yet exist is premature. To quote Yann LeCun, it's like "devoting enormous amounts of energy to discuss aircraft safety before we know how to build airplanes."

In summary, we urge everyone to stay calm and carry on. Yes, there's going to be a lot of change, but humans have made it this far because our superpower is adapting.

Google Merges Two Top AI Teams & Replit Partnership

After Google's embarrassing AI presentation and Bard's flop, the company has been under real pressure to move faster. This week, two of the world's top AI teams, DeepMind and Google Brain, joined forces, a move aimed at accelerating progress against OpenAI.

Google also announced a partnership with Replit that will bring Replit's tools to Google Cloud.

The Rise of the Domain-Specific GPT: Bloomberg Unveils BloombergGPT

This week, Bloomberg unveiled BloombergGPT, a 50-billion-parameter model purpose-built for finance. The model is designed to help with tasks such as sentiment analysis (is this statement positive or negative?), named entity recognition (e.g., recognizing that "GM" is a company), news classification, and question answering.

This is very interesting news, and we believe it signals a new movement: domain-specific GPTs. We expect to see more of these in the future, for example a LexisNexisGPT for legal research or a BioMedGPT for medicine.

AI Open Source: Big Moves

The open-source community is releasing a slew of tools that help accelerate domain-specific LLM development, and this week brought a number of exciting releases. LAION released OpenFlamingo, an open-source framework for training large multimodal (image-and-text) models, along with Multimodal C4, an image-augmented version of the C4 dataset that was used to train Google's T5 models.

Cerebras released Cerebras-GPT, a family of seven open-source GPT models ranging from 111M to 13B parameters. The smaller models may be compact enough to embed directly in devices, which could put an LLM inside small electronics and open up a wide range of use cases in consumer products. Imagine being able to ask your car questions in natural language.

Vicuna, an open-source chatbot, was also released for non-commercial use. Its authors claim roughly 90% of ChatGPT's quality (as judged by GPT-4) from a relatively small 13B-parameter model that cost only about $300 to train.

Meanwhile, about 5,000 AI enthusiasts gathered in San Francisco this weekend for "Woodstock AI," an open-source meetup sponsored by Hugging Face. Several attendees reported a level of energy and excitement they hadn't seen in the last two tech cycles.

Primitive AGIs: Are we closer than we think to Artificial General Intelligence?

Seattle-based Yohei Nakajima created a prototype "AGI." Given an objective, the system completes tasks, generates new tasks based on the results, and reprioritizes its task list in real time. It demonstrates how large language models can begin to run as autonomous agents; check out his Twitter thread for details. Meanwhile, Toran Richards created a similar system, AutoGPT.

Is this an example of AGI? It may not meet most people's definition of AGI, but could it be considered a primitive form of it? We argue that it can! While still in its early stages, the system demonstrates many of the characteristics expected of AGI, such as the ability to reason, plan, learn, communicate in natural language, and act, albeit in a primitive manner. In a recent Twitter post, user Ate-a-Pi compared Yohei's architecture with several of the components that Yann LeCun has said would be necessary for AGI.
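The execute, create, prioritize loop behind Yohei's prototype is simple enough to sketch. The Python below is a minimal illustration under our own assumptions, not his actual code: `run_agent` and `fake_llm` are hypothetical names, the prompts are made up, and `fake_llm` returns canned text so the loop runs offline. A real agent would swap in a call to an actual model API.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

# Hypothetical model interface: prompt string in, completion string out.
LLM = Callable[[str], str]


def run_agent(objective: str, first_task: str, call_llm: LLM,
              max_steps: int = 5) -> List[Tuple[str, str]]:
    """Minimal execute -> create -> prioritize loop (illustrative sketch only)."""
    tasks: Deque[str] = deque([first_task])
    completed: List[Tuple[str, str]] = []  # (task, result) pairs act as memory

    for _ in range(max_steps):
        if not tasks:
            break

        # 1. Execute: pop the highest-priority task and ask the model to do it.
        task = tasks.popleft()
        result = call_llm(f"Objective: {objective}\nTask: {task}\nResult:")
        completed.append((task, result))

        # 2. Create: ask for follow-up tasks, skipping ones already done or queued.
        done = {t for t, _ in completed}
        proposal = call_llm(
            f"Objective: {objective}\nLast result: {result}\n"
            f"Pending: {list(tasks)}\nList new tasks, one per line:"
        )
        for line in proposal.splitlines():
            new_task = line.strip()
            if new_task and new_task not in done and new_task not in tasks:
                tasks.append(new_task)

        # 3. Prioritize: ask the model to reorder the pending list. Only tasks
        #    that were actually pending are kept, so the model cannot silently
        #    invent or drop work while reordering.
        if tasks:
            reordered = call_llm(
                f"Objective: {objective}\nReorder these tasks, one per line:\n"
                + "\n".join(tasks)
            ).splitlines()
            kept = list(dict.fromkeys(
                line.strip() for line in reordered if line.strip() in tasks
            ))
            tasks = deque(kept + [t for t in tasks if t not in kept])

    return completed


def fake_llm(prompt: str) -> str:
    """Hypothetical offline stand-in for a model API, returning canned text."""
    if prompt.endswith("Result:"):
        # Execution prompt: pretend the task was completed.
        task_line = prompt.split("Task: ", 1)[1].split("\n", 1)[0]
        return f"done: {task_line}"
    if "List new tasks" in prompt:
        # Creation prompt: always propose the same two follow-up tasks.
        return "Summarize findings\nDraft report"
    # Prioritization prompt: reverse the pending list.
    pending = prompt.split("one per line:\n", 1)[1].splitlines()
    return "\n".join(reversed(pending))


if __name__ == "__main__":
    for task, result in run_agent("Research LLM agents", "Search for papers", fake_llm):
        print(f"{task!r} -> {result!r}")
```

Capping `max_steps` is a deliberate choice in this sketch: because the model keeps proposing new tasks, nothing guarantees the task list ever empties, so a hard limit keeps the loop (and any API bill) bounded.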

One more thing...

Smaller But Still Cool Things:

Five generative AI text-to-video models have been released in the last two weeks

Watch an AI Chatbot pass a data science interview given by two NYU professors

Draw a user interface in ASCII art, and GPT-4 will implement it

Going Deeper

Tweets of the Week

I don’t think people realize what ChatGPT plugins will become.

It’s a whole new internet protocol.

Over time, every site on the internet will have an API and an ai-plugin.json file.

AI will have an internet-wide map with instructions for how to interface with the world.

— Mckay Wrigley (@mckaywrigley)

3:58 PM • Mar 28, 2023
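For context on the tweet above: a ChatGPT plugin is described by a small JSON manifest, `ai-plugin.json`, which a site serves from its `/.well-known/` directory and which points the model to an OpenAPI description of the site's API. The Python sketch below builds a minimal manifest. The field names reflect OpenAI's plugin documentation as we understand it, while the to-do service, URLs, email address and the `manifest_url` helper are hypothetical placeholders.

```python
import json

# Sketch of a ChatGPT plugin manifest (ai-plugin.json). Field names follow
# OpenAI's plugin documentation as we understood it in early 2023; every value
# below is a hypothetical placeholder for an imaginary to-do service.
manifest = {
    "schema_version": "v1",
    "name_for_human": "Todo List",
    "name_for_model": "todo",
    "description_for_human": "Manage your to-do list.",
    "description_for_model": "Add, list and delete items on the user's to-do list.",
    "auth": {"type": "none"},
    # The model learns how to call the site from this OpenAPI spec.
    "api": {"type": "openapi", "url": "https://example.com/openapi.yaml"},
    "logo_url": "https://example.com/logo.png",
    "contact_email": "support@example.com",
    "legal_info_url": "https://example.com/legal",
}


def manifest_url(domain: str) -> str:
    """Hypothetical helper: the well-known path where a site publishes its manifest."""
    return f"https://{domain}/.well-known/ai-plugin.json"


if __name__ == "__main__":
    print(manifest_url("example.com"))
    print(json.dumps(manifest, indent=2))
```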

Pretty cool GPT4 application - draw ASCII circuit topologies (I was messing around with some HDLs)

— Kevin Fischer (@KevinAFischer)

7:51 PM • Apr 2, 2023

How is everyone dealing with the rapid pace of AI?

Based on DMs and some group chats I'm in, many in Silicon Valley seem worried and are questioning what to do with their lives now that everything is changing.

— Nathan Lands (@NathanLands)

4:30 PM • Mar 29, 2023

"Will Smith eating spaghetti" generated by Modelscope text2video

credit: u/chaindrop from r/StableDiffusion

— Magus Wazir (@MagusWazir)

3:25 AM • Mar 28, 2023

This is fun: Bing does process re-engineering for fictional evil organizations.

You are a management consultant, analyze the org chart of Mordor. How would you improve it to make it more flexible in winning in today's changing Middle Earth? Provide KPIs.

Write a re-org memo.

— Ethan Mollick (@emollick)

2:40 AM • Mar 28, 2023

"any sufficiently advanced technology is indistinguishable from magic" ✨ p sure i've never said this about anything we've made.

meet uncle rabbit—the first conversational holographic ai being powered by #ChatGPT @OpenAI powered by @LKGGlass

(hey @DBtodomundo 👋🏼)

— nikki ◕ ᴗ ◕ (@nikkiccccc)

4:51 PM • Mar 29, 2023

A guy named altman building the alternative to man

— aidan (@Aidan_Wolf)

5:01 AM • Mar 26, 2023

Eye Candy

With Adobe making generative AI "safe for business," here are some jewels we discovered from artists using its new tool, Adobe Firefly...

I made a tiny comic book using #AdobeFirefly

It's great at keeping consistent characters! I recorded a tutorial of my process and will share it with you shortly.

— Kris Kashtanova (@icreatelife)

6:14 PM • Mar 31, 2023

Using #AdobeFirefly to create cute 3D characters can be addictive 😎

Prompt: "a cute 3D character made of [insert material]"

Don't include anything describing the setting or background if you want a clean studio style portrait.

— Lee Brimelow (@leebrimelow)

11:57 PM • Mar 29, 2023

My #adobefirefly #robot #puppy with a bit of animation manually added @AdobeVideo @jnack @AdobeLive_

— Chris Georgenes (@keyframer)

2:57 AM • Mar 31, 2023

a decent patch variant. I can't wait until I have more control over the outcome in Adobe Firefly.

— IronFella🇺🇦 (@RobotUnderlord)

8:00 PM • Apr 2, 2023

Moon Flowers with #AdobeFirefly

+

#ai #scifiart

— Karoline Georges (@KarolineGeorges)

9:20 PM • Mar 28, 2023

Do you have 30 seconds for a quick survey to help us improve Everyday AI?

We'd love your feedback! Click here.

Do you like what you're reading? Share it with a friend.